The Nvidia A100 80GB GPU has twice the memory of its predecessor and, with more than 2TBps of memory bandwidth, provides "unprecedented speed and performance" for AI and HPC applications.

"Achieving state-of-the-art results in HPC and AI research requires building the biggest models, but these demand more memory capacity and bandwidth than ever before," said Nvidia vice president of applied deep learning research Bryan Catanzaro.

"The A100 80GB GPU provides double the memory of its predecessor, which was introduced just six months ago, and breaks the 2TB per second barrier, enabling researchers to tackle the world's most important scientific and big data challenges."

For AI training, the extra memory pays off on the largest models: recommender models such as DLRM, for example, can be trained three times more quickly. The additional memory also means larger models can be trained on a single server.

Conversely, Nvidia's Multi-Instance GPU (MIG) technology means an A100 can be partitioned into up to seven GPU instances, each with 10GB of memory. This provides secure hardware isolation and maximises GPU utilisation for a variety of smaller workloads, the company said.

Performance improvements can also be seen in inferencing. On the RNN-T speech recognition model, the A100 80GB delivers 1.25 times higher inference throughput in production.

HPC applications also benefit. Quantum Espresso, a materials simulation suite, nearly doubled its throughput on a single A100 80GB.

"Speedy and ample memory bandwidth and capacity are vital to realising high performance in supercomputing applications," said Satoshi Matsuoka, director of the Riken Center for Computational Science.

"The Nvidia A100 with 80GB of HBM2e GPU memory, providing the world's fastest 2TBps of bandwidth, will help deliver a big boost in application performance."

The Nvidia A100 80GB GPU is available in the Nvidia DGX A100 systems and the new Nvidia DGX Station A100.

Other vendors expected to announce systems integrating four or eight A100 80GB GPUs include Atos, Dell Technologies, Fujitsu, Gigabyte, Hewlett Packard Enterprise, Inspur, Lenovo, Quanta and Supermicro, with delivery in the first half of 2021.

The Nvidia DGX Station A100 is described as "the world's only petascale workgroup server," delivering 2.5 petaflops of AI performance.

According to Nvidia, it is the only workgroup server with four of the latest Nvidia A100 Tensor Core GPUs fully interconnected with Nvidia NVLink, with up to 320GB of GPU memory.

Nvidia Multi-Instance GPU technology means one DGX Station A100 provides up to 28 separate GPU instances (seven on each of its four GPUs) to run parallel jobs and support multiple users without impacting system performance.

"DGX Station A100 brings AI out of the data centre with a server-class system that can plug in anywhere," said Nvidia vice president and general manager of DGX systems Charlie Boyle.

"Teams of data science and AI researchers can accelerate their work using the same software stack as Nvidia DGX A100 systems, enabling them to easily scale from development to deployment."

For data centre workloads, the A100 80GB GPUs will be available in DGX A100 systems, giving 640GB of GPU memory per system and allowing the use of larger datasets and models.
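The system-level memory and instance counts quoted for the two DGX products follow from simple per-GPU multiplication. The short Python sketch reproduces them, assuming 80GB and up to seven MIG instances per A100 as stated in the article. The eight-GPU count for DGX A100 is an assumption inferred from 640GB ÷ 80GB rather than stated here, and `system_totals` is a hypothetical helper for illustration only.

```python
# Illustrative arithmetic only; per-GPU figures are those quoted in the article.
GPU_MEMORY_GB = 80          # A100 80GB
MIG_INSTANCES_PER_GPU = 7   # up to seven MIG instances per A100


def system_totals(num_gpus: int) -> tuple[int, int]:
    """Hypothetical helper: return (total GPU memory in GB, max MIG instances)."""
    return num_gpus * GPU_MEMORY_GB, num_gpus * MIG_INSTANCES_PER_GPU


# DGX Station A100: four GPUs, per the article.
station_mem, station_mig = system_totals(4)
# DGX A100: eight GPUs (assumption inferred from 640GB / 80GB).
dgx_mem, dgx_mig = system_totals(8)

print(f"DGX Station A100: {station_mem}GB, up to {station_mig} MIG instances")
print(f"DGX A100: {dgx_mem}GB, up to {dgx_mig} MIG instances")
```

The four-GPU case yields the 320GB and 28 instances cited for DGX Station A100, and the eight-GPU case yields the 640GB cited for DGX A100.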

These DGX A100 640GB systems can also be integrated into the Nvidia DGX SuperPOD Solution for Enterprise.

The first DGX SuperPOD systems with DGX A100 640GB will include the UK's Cambridge-1 supercomputer for healthcare research, and the University of Florida HiPerGator AI supercomputer.

Nvidia DGX Station A100 and Nvidia DGX A100 640GB systems will be available this quarter through Nvidia partner network resellers worldwide. An upgrade option will be provided for Nvidia DGX A100 320GB customers.

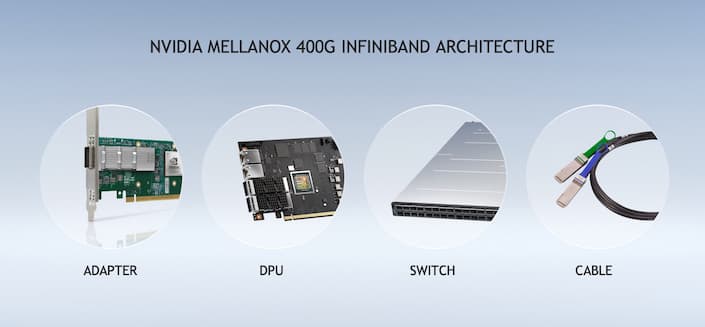

Nvidia Mellanox 400G InfiniBand provides "a dramatic leap in performance offered on the world's only fully offloadable, in-network computing platform," company officials said.

The seventh generation of Mellanox InfiniBand, NDR, provides ultra-low latency, doubles data throughput to 400Gbps and adds Nvidia In-Network Computing engines for additional acceleration.

Vendors including Atos, Dell Technologies, Fujitsu, Inspur, Lenovo and Supermicro plan to add Nvidia Mellanox 400G InfiniBand to their enterprise and HPC products.

"The most important work of our customers is based on AI and increasingly complex applications that demand faster, smarter, more scalable networks," said Nvidia senior vice president of networking Gilad Shainer.

"The Nvidia Mellanox 400G InfiniBand's massive throughput and smart acceleration engines let HPC, AI and hyperscale cloud infrastructures achieve unmatched performance with less cost and complexity."

The Nvidia Mellanox NDR 400G InfiniBand offers three times the switch port density, boosts AI acceleration power 32 times and increases aggregate bi-directional switch system throughput fivefold, to 1.64Pbps.

"Microsoft Azure's partnership with Nvidia Networking stems from our shared passion for helping scientists and researchers drive innovation and creativity through scalable HPC and AI. In HPC, Azure HBv2 VMs are the first to bring HDR InfiniBand to the cloud and achieve supercomputing scale and performance for MPI customer applications with demonstrated scaling to eclipse 80,000 cores for MPI HPC," said Microsoft head of product for Azure HPC Nidhi Chappell.

"In AI, to meet the high-ambition needs of AI innovation, the Azure NDv4 VMs also leverage HDR InfiniBand with 200Gbps per GPU, a massive total of 1.6Tbps of interconnect bandwidth per VM, and scale to thousands of GPUs under the same low-latency InfiniBand fabric to bring AI supercomputing to the masses. Microsoft applauds the continued innovation in Nvidia's Mellanox InfiniBand product line, and we look forward to continuing our strong partnership together."

The third generation of Nvidia Mellanox SHARP technology allows deep learning training operations to be offloaded to and accelerated by the InfiniBand network, resulting in 32 times higher AI acceleration power.

Edge switches based on the Mellanox InfiniBand architecture carry an aggregate bi-directional throughput of 51.2Tbps, while modular switches will carry an aggregate bi-directional throughput of 1.64Pbps, five times that of the previous generation.

Samples of products based on Nvidia Mellanox NDR 400G InfiniBand are expected to become available in the second quarter of 2021.