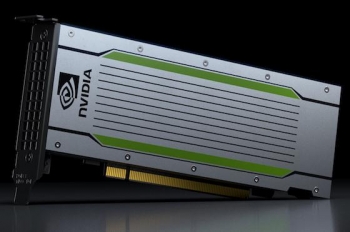

The TensorRT Hyperscale Inference Platform is based on Nvidia's Tesla T4 GPU, which the company claims is the most advanced inference GPU available. According to Nvidia, the T4 offers roughly an order of magnitude more FP16 throughput than the Tesla P4 (65 vs 5.5 TFLOPS) and runs various inferencing workloads between 20 and 36 times faster.

Version 5 of the TensorRT inference optimiser and runtime software includes the TensorRT Inference Server, which allows multiple models to scale across multiple GPUs.

The Inference Server is made available as a free, ready-to-run container that integrates with Kubernetes and Docker, and fits into DevOps environments.


"AI is becoming increasingly pervasive, and inference is a critical capability customers need to successfully deploy their AI models, so we're excited to support Nvidia's Turing Tesla T4 GPUs on Google Cloud Platform soon," said Google Cloud product manager Chris Kleban.

Hardware manufacturers supporting the platform include Cisco, Dell EMC, Fujitsu, HPE, IBM and Supermicro.

"Cisco's UCS portfolio delivers policy-driven, GPU-accelerated systems and solutions to power every phase of the AI lifecycle. With the Nvidia Tesla T4 GPU based on the Nvidia Turing architecture, Cisco customers will have access to the most efficient accelerator for AI inference workloads, gaining insights faster and accelerating time to action," said Cisco data centre group vice-president of product management, Kaustubh Das.

"Dell EMC is focused on helping customers transform their IT while benefitting from advancements such as artificial intelligence. As the world's leading provider of server systems, Dell EMC continues to enhance the PowerEdge server portfolio to help our customers ultimately achieve their goals," said Dell EMC senior vice-president of product management and marketing for servers and infrastructure systems, Ravi Pendekanti.

"Our close collaboration with Nvidia and historical adoption of the latest GPU accelerators available from their Tesla portfolio play a vital role in helping our customers stay ahead of the curve in AI training and inference."